Backgrounder Augmented Focus™

Understanding the principles of our unique and patented Augmented Focus technology is easy, when the methods the brain uses to focus on sound inputs are considered.

The human brain is always looking for changes in its perceptual environment. In vision, this is called "edge detection": our brain's ability to enhance contrast around edges or silhouettes. This was of great importance (way) back in the day for spotting danger (a lion in the grass) or finding food in nature.

In hearing, the same thing occurs when, for example, a sound with more dynamics (e.g. the transition between soft and loud) is naturally given more attention by the brain. When a person enters a new environment, he or she initially focuses on the biggest contrasts in both the visual and auditory domains. This helps individuals group information and recognize patterns more efficiently.

One common example where this phenomenon occurs is when people are watching a movie in a theatre. When sound engineers, who have mixed the movie's audio, want to steer the attention of the audience toward the film's dialogue, they add more contrast to the relevant speech sounds to make those signals stand out from the background noise.

A critical part of this mixing process involves controlling the sound of the surroundings, so they don't compete with the important dialogue. This engineering sleight of hand automatically "moves" the less important sounds further away from the moviegoer, while simultaneously bringing the talker of interest closer to them. The stream containing the talker's voice and the sound stream containing less important sounds are processed independently, in their own time and with their respective changes.

The result is what we call an augmented experience, and it is the core of Signia's new platform.

The art of Augmented Focus™:

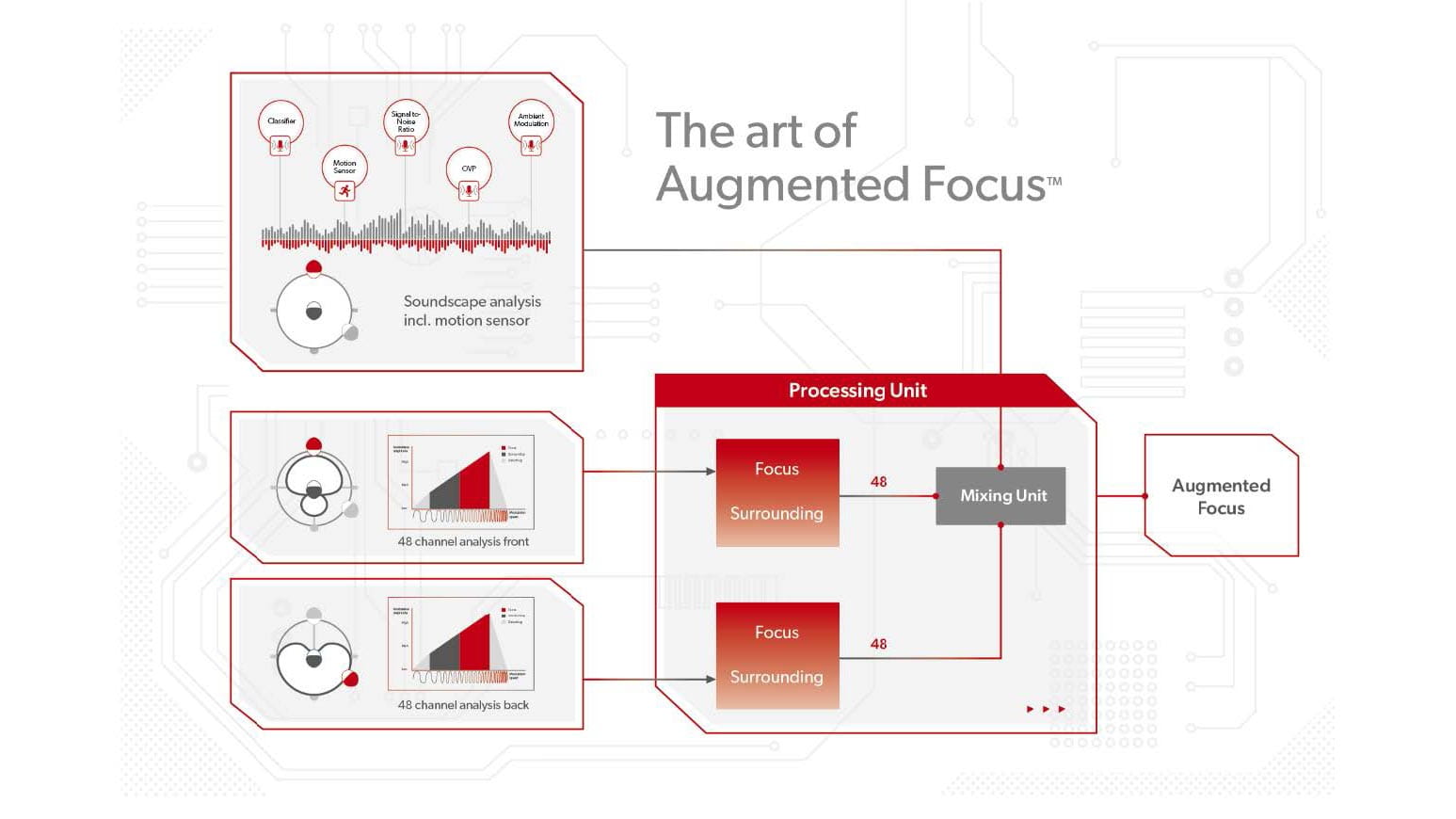

Figure 1 Outline of all relevant processing units in Augmented Focus: Analysis stage; Processing Unit; Mixing Unit.

In this example, the Focus area is to the front. Please note that Focus could be in any direction.

Augmented Focus™ Analysis:

Signia’s unique beamforming technology forms the core of Augmented Focus. The sound input to the hearing aid is split into two signal streams. One stream contains sounds coming from the front of the wearer, while the other stream contains sounds arriving from the back. Both streams are then processed independently.

This means that for each stream, a dedicated processor is used to analyze the characteristics of sound from every direction.
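The front/back split described above can be illustrated with a classic delay-and-subtract arrangement of two microphones. This is only a minimal sketch under stated assumptions, not Signia's beamformer: the function names, the whole-sample `delay` between the microphones, and the helper that simulates a source are all hypothetical.

```python
def split_front_back(front_mic, rear_mic, delay):
    """Return (front_stream, back_stream) from two microphone signals.

    Assumption: `delay` is the whole number of samples sound needs to
    travel from one microphone to the other. Each output subtracts the
    delayed signal of the opposite microphone, which cancels sound
    arriving from the opposite direction.
    """
    front_stream, back_stream = [], []
    for i in range(len(front_mic)):
        rear_delayed = rear_mic[i - delay] if i >= delay else 0.0
        front_delayed = front_mic[i - delay] if i >= delay else 0.0
        front_stream.append(front_mic[i] - rear_delayed)  # cancels sound from the back
        back_stream.append(rear_mic[i] - front_delayed)   # cancels sound from the front
    return front_stream, back_stream


def simulate_source(signal, delay, from_front):
    """Hypothetical helper: microphone signals for a source directly in front or behind."""
    delayed = [0.0] * delay + signal[:len(signal) - delay]
    # A frontal source reaches the front microphone first, and vice versa.
    return (signal, delayed) if from_front else (delayed, signal)


talker = [0.0, 1.0, 0.5, -0.5, -1.0, 0.0, 0.25, 0.75]
front_mic, rear_mic = simulate_source(talker, delay=2, from_front=True)
front_stream, back_stream = split_front_back(front_mic, rear_mic, delay=2)
print(front_stream)
print(back_stream)
```

In this idealized case a talker directly in front appears only in the front stream, so each stream can then be handed to its own processor.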

Augmented Focus™ Stream Analysis:

Both processors independently analyze their own sound stream with a resolution of 48 channels. Through this analysis, Augmented Focus determines if the signal contains information the wearer wants to focus on, relevant information in the background that helps the wearer stay attuned to the surroundings, or distracting background sounds the wearer wants to suppress. Additionally, Augmented Focus determines the probability that each of these categories might be present in the stream.

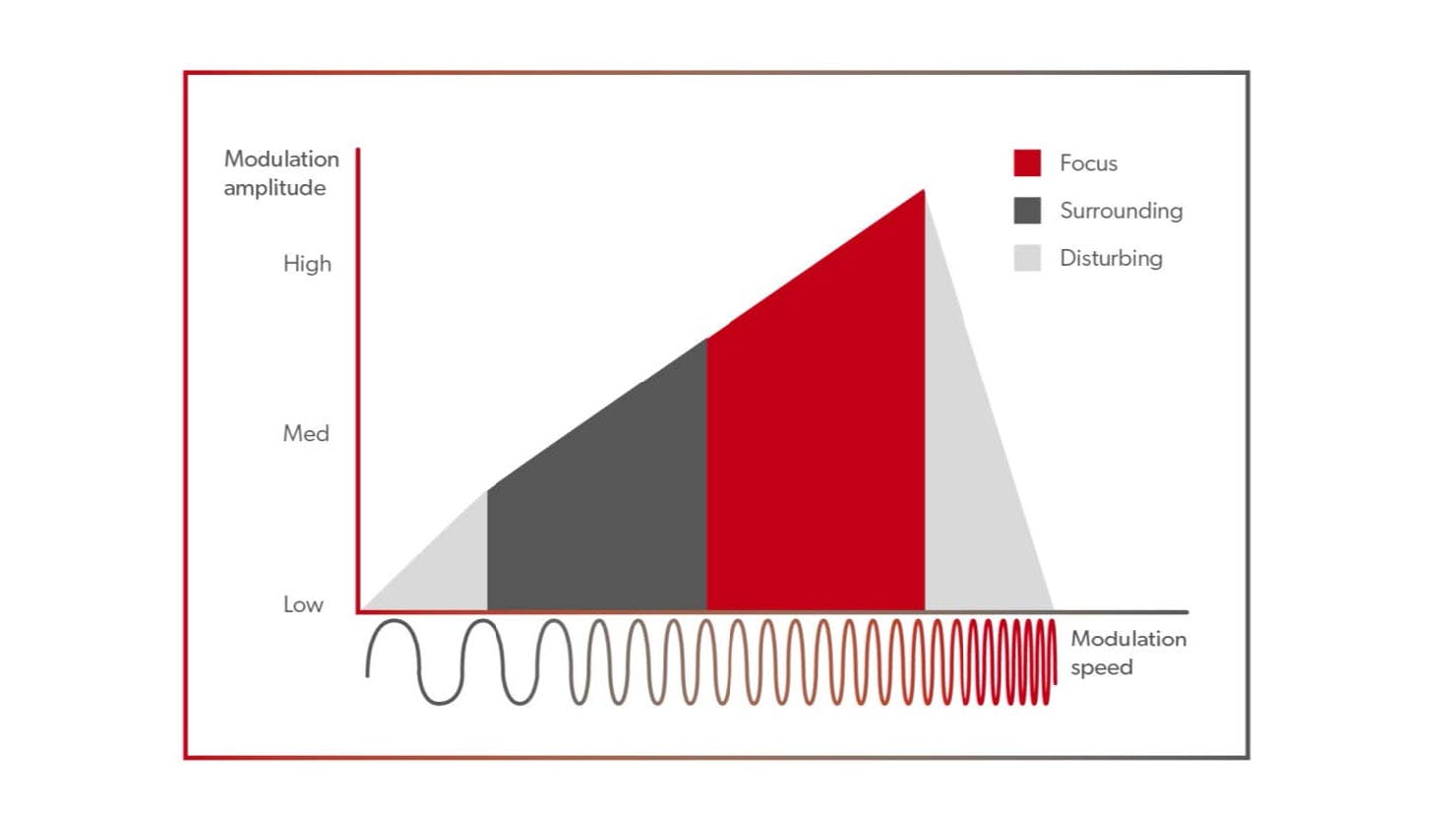

Accordingly, Augmented Focus relies on the speed and the strength of the input signal’s amplitude modulation. Stationary noises with slow and soft modulation (humming, fan noise) are often annoying. Similarly, fast and strongly modulated transients like dish clattering are distracting or annoying as well. Both are recognized and suppressed by both processors within Augmented Focus.

In contrast, relevant information is mostly contained in sound inputs with medium-fast modulations. That is, Augmented Focus distinguishes between different modulation rates of the input signal:

- slow - mostly unwanted sounds like fan noise

- med/slow - most of the surrounding sounds

- med/fast - what the human brain usually wants to focus on

- fast - typically distracting noise in the surroundings.

Figure 2 illustrates this unique characteristic of Augmented Focus.

Figure 2 Visualization of sound categorization within stream analysis.
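The four modulation categories listed above can be sketched as a simple classifier. The source gives no numeric boundaries, so the band edges in Hz below are purely illustrative assumptions, as are the function names and the mean-crossing estimate of the modulation rate; the real analysis also runs per channel and weighs modulation strength, which this sketch leaves out.

```python
import math


def modulation_rate_hz(envelope, frame_rate_hz):
    """Estimate the modulation rate by counting crossings of the envelope mean."""
    mean = sum(envelope) / len(envelope)
    crossings = 0
    for prev, cur in zip(envelope, envelope[1:]):
        if (prev - mean) * (cur - mean) < 0:
            crossings += 1
    duration_s = len(envelope) / frame_rate_hz
    return crossings / (2.0 * duration_s)  # two crossings per modulation cycle


# Illustrative upper band edges in Hz. These numbers are assumptions,
# not values published for Augmented Focus.
BANDS = [
    (1.0, "slow: mostly unwanted sounds"),
    (3.0, "medium-slow: surrounding sounds"),
    (12.0, "medium-fast: likely focus"),
]


def categorize(rate_hz):
    """Map a modulation rate to one of the four categories."""
    for upper_edge_hz, label in BANDS:
        if rate_hz < upper_edge_hz:
            return label
    return "fast: typically distracting"


# Example: a level envelope sampled 100 times per second, modulated at 4 Hz.
envelope = [1.0 + 0.5 * math.sin(2 * math.pi * 4.0 * n / 100.0 + 0.3)
            for n in range(200)]
print(categorize(modulation_rate_hz(envelope, 100.0)))
```

A hard threshold is the simplest possible choice here; the text describes estimating a probability for each category, which would replace the single label with a set of weights.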

Augmented Focus™ Soundscape Analysis:

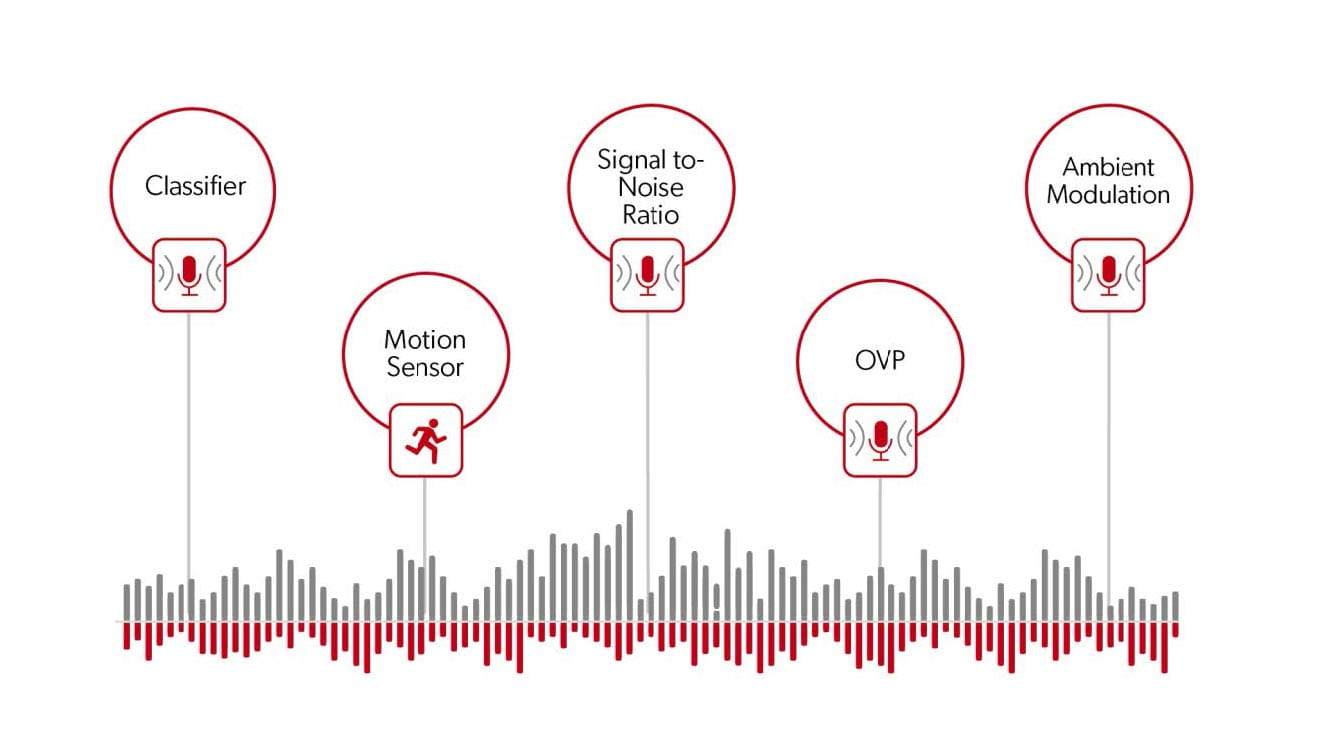

In addition to the separate stream analysis, Augmented Focus maintains the dynamics of the soundscape around the wearer.

To do this, Augmented Focus uses a powerful soundscape processing unit, a carryover from the successful Xperience platform. This two-analysis system (Stream & Soundscape Analysis), operating in tandem, enables Augmented Focus to know exactly what is happening around the hearing aid wearer at all times, regardless of the listening situation. Figure 3 illustrates the essential components of Augmented Focus’ soundscape analysis.

Figure 3 Soundscape Analysis.

Augmented Focus™ Processing and Sound Design:

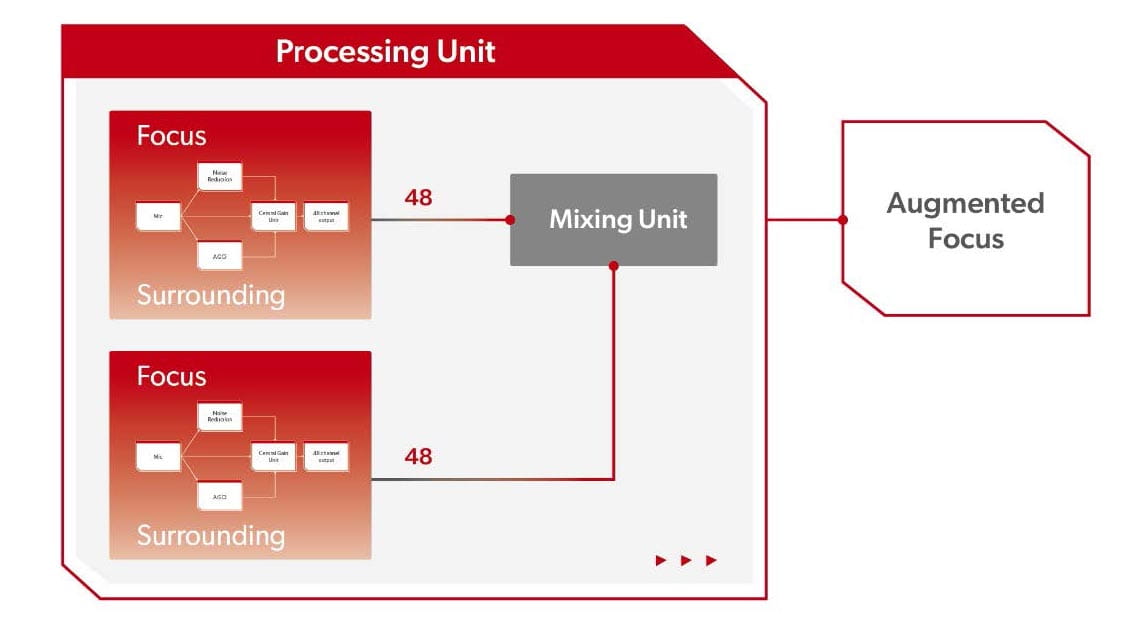

The most remarkable wearer benefit results from this processing strategy: By shaping the two signal streams independently and without compromise and by maintaining a resolution of 48 channels in each stream, Augmented Focus creates a totally unique sound experience for the wearer.

Knowledge of the content of both streams allows Augmented Focus to process the sound in the hearing aid in the same way as a movie sound engineer, as previously described. Similar to the processing in Augmented Focus, the movie sound engineer has access to the different sound streams of the movie (the dialogue, atmosphere and music) and applies different sound design philosophies by acting on each stream independently and combining them in varying ways.

Inside the Augmented Focus Processing stream:

From serial to orchestrated processing

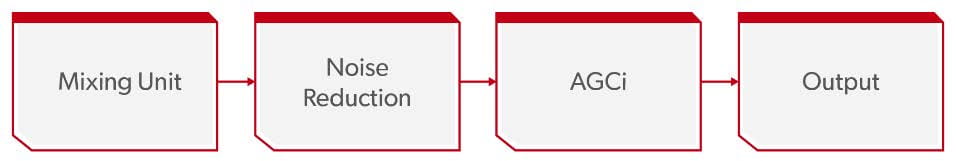

Augmented Focus is a groundbreaking change in the hearing aid’s sound processing architecture. Previously, all hearing aid algorithms were processed one after another in a series, as shown in Figure 4. Now, with Augmented Focus, hearing aid processing is achieved in a much different way.

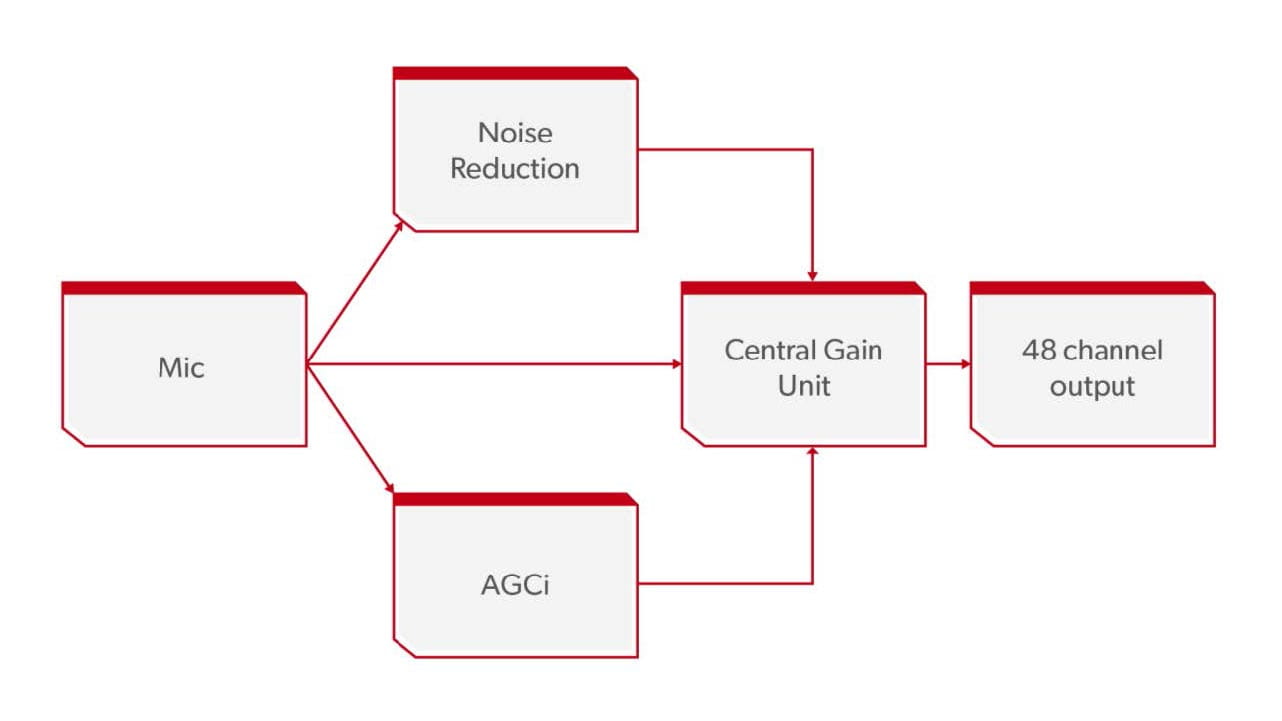

Figure 4 Traditional serial processing chain.

A common problem associated with traditional serial processing is audible artifacts. For example, a noise reduction algorithm may reduce overall gain, but a compression algorithm then analyzes the resulting signal and increases gain again. In extreme cases, this can amplify small artifacts and result in smeared or unstable sound.

Figure 5 A simplified scheme of orchestrated processing. Central Gain Unit controls all gain changes in 48 channels. (AGCi = Automatic Gain Control for input sound levels).

The processing architecture of Augmented Focus is radically different, as outlined in Figure 5. All algorithms receive the same clean input signal and all processing is performed in parallel. Calculated gain changes are combined in the central gain unit and applied only once. In this way, Augmented Focus avoids the artifacts generated by interactions between several algorithms processed in series, as in the traditional chain of Figure 4.

Figure 6 illustrates this parallel processing, unique to Augmented Focus, occurring in both processing streams.

Figure 6 Two processing streams in the processing unit.
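The difference between serial and orchestrated processing can be made concrete with a small numeric sketch for a single channel. The compressor curve (30 dB base gain, 50 dB knee, 2:1 ratio) and the 10 dB noise reduction are assumed values chosen for illustration, and the function names are hypothetical.

```python
def compressor_gain_db(level_db, base_gain_db=30.0, knee_db=50.0, ratio=2.0):
    """Gain of a simple compressor: full gain below the knee, less above it."""
    if level_db <= knee_db:
        return base_gain_db
    return base_gain_db - (level_db - knee_db) * (1.0 - 1.0 / ratio)


def serial_output_db(level_db, noise_reduction_db):
    """Serial chain: the compressor analyzes the already noise-reduced signal."""
    reduced_db = level_db - noise_reduction_db
    return reduced_db + compressor_gain_db(reduced_db)


def orchestrated_output_db(level_db, noise_reduction_db):
    """Parallel scheme: both gains come from the same input and are applied once."""
    total_gain_db = -noise_reduction_db + compressor_gain_db(level_db)
    return level_db + total_gain_db


# A 70 dB noise input on which noise reduction wants to remove 10 dB.
print(serial_output_db(70.0, 10.0))        # 85.0
print(orchestrated_output_db(70.0, 10.0))  # 80.0
```

Without noise reduction this compressor would deliver 90 dB. In the serial chain the compressor sees the quieter signal and gives back half of the intended reduction, so only 5 dB remains; in the orchestrated scheme the full 10 dB survives because the compressor never reacts to the noise reduction.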

New way of directional processing

The combination of the two streams, each using independent gain processing, creates a directional system. If a sudden loud sound appears from behind the wearer, the compression in the back-processing stream attenuates the gain (like any normal compressor). The resulting attenuation creates a directional amplification pattern. The same is true for the noise reduction – if noise is detected in one of the streams it can be attenuated separately, also resulting in a directional amplification pattern.

Essentially, Augmented Focus is a directional compression and noise reduction system. That is, compression and noise reduction can be applied separately for sounds coming from the front as well as inputs coming from the back of the wearer.
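How independent compression in each stream produces a directional pattern can be sketched with two levels and one compressor curve. All numbers are assumptions for illustration (a 60 dB talker in front, an 85 dB sound from behind, a 2:1 compressor with a 50 dB knee), and the function names are hypothetical.

```python
import math


def compressor_gain_db(level_db, base_gain_db=30.0, knee_db=50.0, ratio=2.0):
    """Gain of a simple compressor: full gain below the knee, less above it."""
    if level_db <= knee_db:
        return base_gain_db
    return base_gain_db - (level_db - knee_db) * (1.0 - 1.0 / ratio)


def combined_level_db(levels_db):
    """Level of several uncorrelated sounds added together (power sum)."""
    return 10.0 * math.log10(sum(10.0 ** (level / 10.0) for level in levels_db))


def single_stream_gains(front_db, back_db):
    """One compressor on the mixed signal: front and back share one gain."""
    gain_db = compressor_gain_db(combined_level_db([front_db, back_db]))
    return gain_db, gain_db


def two_stream_gains(front_db, back_db):
    """One compressor per stream: each direction gets its own gain."""
    return compressor_gain_db(front_db), compressor_gain_db(back_db)


print(single_stream_gains(60.0, 85.0))
print(two_stream_gains(60.0, 85.0))  # (25.0, 12.5)
```

With a single compressor the loud sound from behind pulls the gain for the talker down to roughly 12.5 dB as well. With two streams the talker keeps 25 dB while the sound from behind is held at 12.5 dB, which is a 12.5 dB front-to-back difference created by compression alone.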

Most hearing aids on the market today claim to have directional noise reduction, which is also true of Signia’s former platforms. However, this claim relates to the analysis of the direction of arrival of the noise, not to the application of the noise reduction itself.

It is well-documented that noise reduction algorithms improve a wearer’s listening comfort but offer minimal speech intelligibility benefit. Because noise reduction algorithms change overall gain, they tend to reduce the gain for speech within each channel.

In real world listening conditions, speech signals and background noise often arrive from different directions. Augmented Focus splits the noise and speech signals into two separate streams, and therefore, automatically detects the noise more efficiently in the stream without speech. Then, noise reduction is only applied in this separate stream. Thus, speech inputs are not affected by the noise reduction algorithm and speech intelligibility tends to be markedly improved.

Sound Design

To provide greater contrast between inputs, the two separate streams of Augmented Focus shape sound by adjusting the compression and noise reduction systems independently. This helps the wearer’s brain steer his or her attention toward meaningful sounds, with less long-term listening effort.

By increasing this contrast, Augmented Focus makes the speech signal clearer and ensures speech inputs are perceived as near the listener, in a way that is both natural and comfortable.

Surrounding sounds are shaped so as to enable the listener to “go deep” into the soundscape (e.g. a faraway bike approaching, little birds on the rooftops, children playing far away, oneself sitting in a café enjoying an espresso). This processing feat inside Augmented Focus is accomplished in a highly controlled manner, so as not to disturb the sounds in focus (e.g. the wearer’s companion sitting at the table).

These sound design principles can be summarized as:

Sound in focus stream:

- Clear, crisp, full dynamics, full bodied.

- Details are richly amplified to enhance audibility.

- The sensation of always being nearer to the sound that really matters and with a little more presence than in real life.

Sound in Surrounding stream:

- High spatial resolution.

- Highly controlled dynamics, but as lively as possible.

- Only as much amplification as needed.

- Excellent sound quality.

- The sensation of having a feeling for the world that surrounds us.

Augmented Focus™ Mixing Unit

Once the sound of the two separate streams is processed as previously described, the Augmented Focus Mixing Unit comes into play. Here, Augmented Focus recombines the two 48-channel streams into a single stream, much as a sound engineer does with a complex mixing console, as depicted in Figure 7.

Figure 7 A typical mixing console in a sound recording studio

The mix of the two streams is not just a simple addition of both streams – it is a complex, crucial step in which the information from all additional sensors on-board the hearing aid is considered.

To achieve the optimal amplified sound mix, similar to the Xperience platform, AX carefully weighs the activity of the wearer. For example, if AX knows the wearer is speaking, it provides the benefits of Own Voice Processing, and if AX sees that the wearer is walking (as detected by the Acoustic Motion Sensor), it combines the two streams in a way that makes enhanced awareness of the environment possible.

The amplified sound mix also depends on the signal-to-noise ratio (SNR) between the two streams and on the class of acoustic listening situation detected (music, car driving, quiet, noisy speech, speech in quiet, or noise alone). In very demanding situations the mixing unit also activates binaural narrow directivity in the front stream and attenuation of the back stream. This strategy provides a speech intelligibility boost that enables some wearers to understand speech better than normal-hearing individuals in certain situations.
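The idea of a context-dependent mix, rather than a plain sum of the two streams, can be sketched as follows. This is a deliberately simplified, broadband illustration: the rules, thresholds and dB offsets are invented for the example and are not the values or logic of the actual Mixing Unit, which works on 48 channels and also handles own voice and the detected situation class.

```python
def mix_offsets_db(walking, snr_db, demanding):
    """Return hypothetical (front_offset_db, back_offset_db) for the mix."""
    front_db, back_db = 0.0, 0.0
    if snr_db < 5.0:
        back_db -= 3.0   # assumed: poor SNR, pull the surroundings back a little
    if demanding:
        back_db -= 6.0   # assumed: very demanding scene, attenuate the back stream more
    if walking:
        back_db = max(back_db, -2.0)  # assumed: keep the environment audible while moving
    return front_db, back_db


def mix(front, back, front_db, back_db):
    """Weight each stream by its offset and add the two sample by sample."""
    front_gain = 10.0 ** (front_db / 20.0)
    back_gain = 10.0 ** (back_db / 20.0)
    return [front_gain * f + back_gain * b for f, b in zip(front, back)]


front_db, back_db = mix_offsets_db(walking=False, snr_db=0.0, demanding=True)
print(front_db, back_db)  # 0.0 -9.0
print(mix([0.2, 0.4], [0.5, 0.5], front_db, back_db))
```

The point of the sketch is only the structure: sensor and scene information first decide the weights, and the weighted streams are then summed into the single output.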

Augmented Focus™ Benefits

The word “focus” is often used in relation to directional microphone processing in a highly demanding, noisy situation. But AX is different: it enhances sound in any situation without removing entire parts of the soundscape.

Augmented Focus processes the sounds around the wearer in a way that allows the brain to effortlessly and naturally process relevant information. In other words, AX helps wearers focus their attention more easily on meaningful sounds in their listening environment.

We use the word “augmented” deliberately. The word describes how the AX platform splits the wearer’s soundscape into two separate streams, shapes those two streams and then recombines them into an augmented listening experience. As a result, Augmented Focus brings remarkable sound clarity to any listening situation.

Real life example

With the previous Signia Xperience platform there was a precise analysis of the soundscape, albeit with some limitations. Let’s take the example of a relatively quiet café as pictured in Figure 8. On the surface, this café looks like an easy listening situation.

Figure 8 The initial idea to develop our AX Focus technology started in a café just like this one. (Photo: The Barn, Berlin)

A hearing aid’s soundscape analysis would most likely predict that this indeed is an easy, relatively quiet listening situation. However, there may be moments where a group of friends sitting at the bar begin laughing loudly. For this brief moment, this seemingly easy listening situation abruptly changes into a difficult one. For a hearing-impaired listener this could result in missing several words of their companion’s speech and disrupting the flow of an otherwise engaging conversation. Just one fleeting moment makes the entire situation challenging and unpleasant.

Unlike in the Xperience platform, the AX platform immediately classifies the laughter as a sound that could mask the focus area, and instantaneously reduces the laughter to make it comfortable and avoid masking the speech. Simultaneously, but completely independently, the other processor, by applying slightly more linear processing, makes the speech sound crisp and clear. As the name implies, by bringing the speech closer to the wearer, while keeping other sounds at a certain perceived distance, AX provides for an augmented listening experience.

Seemingly simple situations like the one just described can be underestimated in hearing aid research because they are difficult to simulate in the laboratory. In addition, the conversation partner may not always be aware of the difficulties the hearing-impaired listener is facing. The experience of such a brief, unpleasant moment, and the knowledge that such moments may occur at random, can weaken the wearer’s confidence and result in fear of being perceived as elderly and handicapped.

There are none of these compromises with AX. Because the two streams are processed independently, AX allows for extremely fast adaptation to changes in the wearer’s environment.

Signia AX allows the hearing aid wearer to shine in any environment.

A brief excursion into photography

An analogy for Augmented Focus Technology

Natural or Augmented?

Figure 9 Processed or unprocessed - what would you say? (Photo by Jonathan Petersson from Pexels)

Nowadays, we are used to looking at photos such as Figure 9. Although the photo is highly processed (augmented), to many viewers, it looks like it has been untouched.

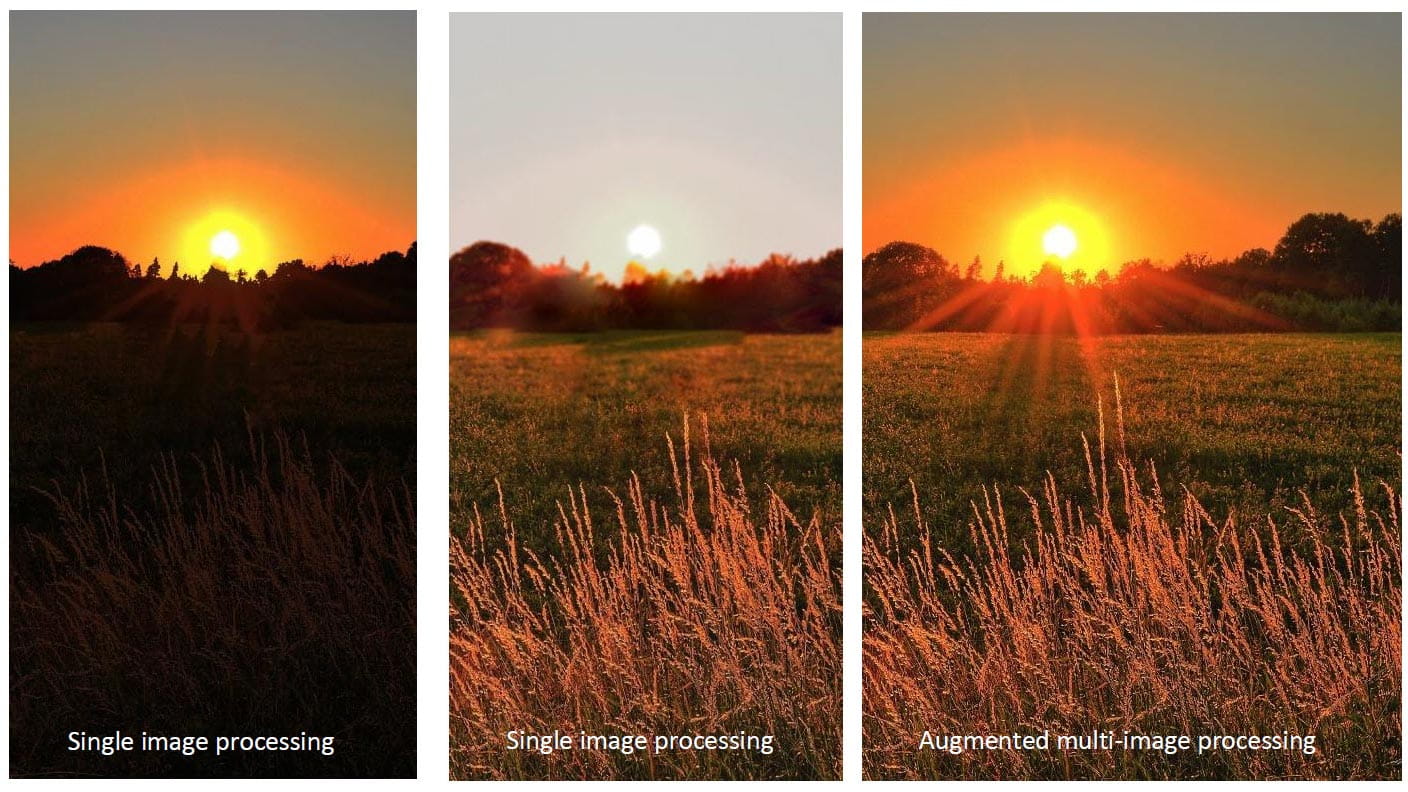

If you take a photograph of a similar scene, the result is often quite disappointing, as shown in Figure 10:

Figure 10 The disappointing result of an unprocessed photo.

In the next attempt, we might try to capture the details of the foreground, at the expense of losing contrast and details in the background of the scene:

Figure 11 An attempt to capture at least the foreground of the above scenery.

Every sensor, such as the image sensor in a camera or the human retina, has a strictly defined dynamic range. If we take only one photo of a scene containing large differences in light levels (i.e. a high dynamic range of the light signal), we need to make a compromise. Either the brighter or the darker parts of the photo will be exposed correctly, but not both.

Figure 12 An example of augmented technology in the world of photography.

The solution is to take several photos with different exposure times and then combine them. The process is shown in Figure 12.
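Combining several exposures can be sketched as a per-pixel weighted average in which well-exposed pixels count most. This is a minimal illustration of the idea, not the method used for the photo in Figure 12: the weighting by closeness to mid-gray and the function name are assumptions.

```python
def fuse_exposures(exposures):
    """Blend several exposures of the same scene, pixel by pixel.

    Each exposure is a list of pixel brightness values in [0, 1]. Pixels
    near mid-gray (0.5) are treated as well exposed and get the largest
    weight; nearly black or nearly white pixels get almost none.
    """
    fused = []
    for pixels in zip(*exposures):
        # The small constant avoids division by zero if every weight is 0.
        weights = [1.0 - abs(p - 0.5) * 2.0 + 1e-6 for p in pixels]
        total = sum(weights)
        fused.append(sum(w * p for w, p in zip(weights, pixels)) / total)
    return fused


# Two pixels: a dark foreground and a bright sky.
short_exposure = [0.05, 0.5]  # sky is fine, foreground is too dark
long_exposure = [0.5, 1.0]    # foreground is fine, sky is blown out
print(fuse_exposures([short_exposure, long_exposure]))
```

In the fused result both pixels end up close to the well-exposed value, so neither the foreground nor the sky has to be sacrificed.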

The hearing aid analogy

In a similar way, the ultimate challenge for every hearing aid is to repackage the dynamics of real-world sound into the limited dynamic range of the individual with hearing loss. With conventional hearing aid processing, as with the image processing shown in Figures 9-11, we are often faced with a similar dilemma. In real-world listening conditions, soft sounds coexist and intermingle with loud ones. If hearing aids apply just one gain strategy, they are forced to either increase the gain for the soft sounds (thereby overamplifying loud sounds) or adjust the gain for the loud sounds (reducing the audibility of soft sounds).

Augmented Focus splits the “picture” (i.e. the sound environment) into two parts and processes each part separately before mixing them back together. Each stream has its own appropriately matched gain, compression and noise reduction.

Summary

Signia Augmented Focus™ is a major leap towards solving the big problem for most hearing aid wearers: separating what they want to focus on from competing sounds, even in complex, fast-changing environments.

Augmented Focus splits the sounds in focus from the surrounding sounds and processes them independently.

Each part is fully optimized without any compromise. The result is a clear contrast between the two, with a clearer, more linear speech signal that appears closer to the wearer than the surroundings. At the same time, the surrounding sounds are also fully optimized, creating an immersive new sound experience that we are very proud of.

Be Brilliant!